New Computing: Digest #1

Magic stones, Tony Stark's workshop, and the will to computing

In the style of Kat Zhang’s Nodal Points Digest, I’d like to assemble a list of nodal points, pivot points for my interest, that have been incredibly fascinating to me over the last few weeks.

I notice that if I don’t actually cycle them out through writing, I begin to cling to ideas, models, and frameworks even as they stale. I would like to have Cool Ideas, and sometimes it is more important to me to Have The Cool Ideas than for the ideas to be actually interesting or useful. So it’s worth processing how my models have evolved over the last few weeks.

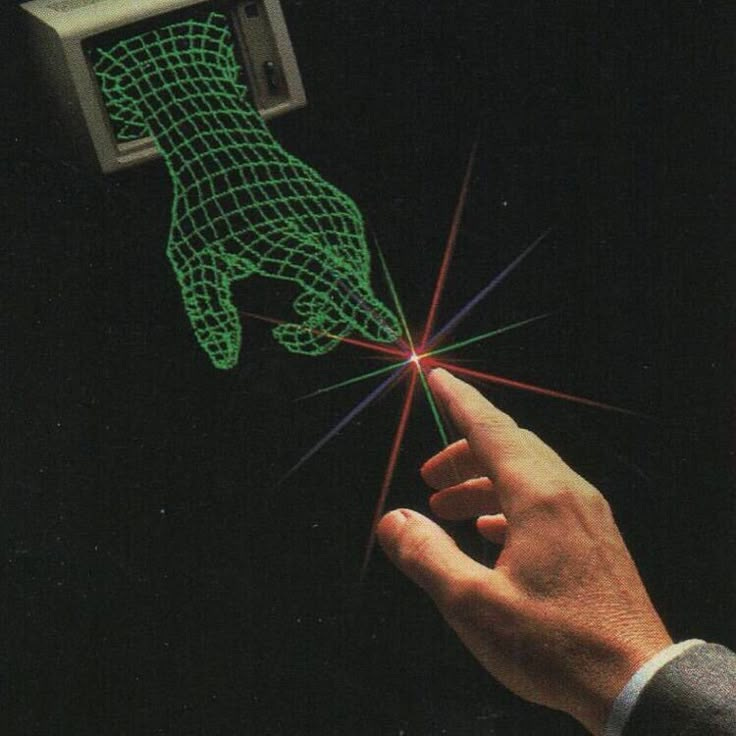

Nodal Point I: The allure of magic stones

Not sure if this is actually stale, but I keep saying that I want a magic stones computing future, where I talk to my magic stones and they tell me things. I like this because it addresses a few concerns:

1) I would like to stay in touch with the outside world. I feel it is unhealthy and limiting that we have to stay locked in staring at two-dimensional screens to use computers.

2) I am uncomfortable with the idea of merging with AI, giving it so much of my data that it knows me better than I know myself. I don’t want to “lose myself.”

3) I am also uncomfortable with the idea of giving some corporation a fuckton of information about me. Imagine that the substrate for the transcendence of humans into post-human symbiote is controlled entirely by OpenAnthropoGoogSoftAI.

But I like to give data to corporations

I feel I’ve outgrown this for a few reasons. The first is that my revealed preference is actually to give so much fucking information to corporations and to intelligent models, lmao. Every single day I dump megabytes of text, code, and images into ChatGPT, Gemini, Claude, and Cursor. The thing that I am actually most frustrated by when I use these tools is that they don’t work better, that they’re slower, that the AI doesn’t understand me well enough. I want it to know me better, because then I can do cooler things.

If one corporation has a lot of power, can we trust it to use that power responsibly? Do I really care about that idea? I have surrendered my entire digital persona to Google and Apple etc. anyway. I am generally happy living under their benevolent dictatorship.

So idk how to balance these things. AI is the technology of centralization, says Peter Thiel. Maybe this is true, or maybe DeepSeek proves that RL will enable us to have many local models that are as powerful as big ones.

maybe it’s a d/acc future?

Maybe the way forward is a d/acc future, built on defensive tech like blockchain. But that feels… idk, unlikely. It seems the arc of the universe bends toward super-corporations. Maybe that’s just fatalism - the internet was a pretty incredible decentralizing technology (actually a protocol!).

But I’m skeptical because I believe that technology needs to be fundamentally more useful for adoption, not just ideologically better for privacy or even embodiment. Crypto hasn’t been useful in the way that AI has.

Open-source software is a good example of something that won on usefulness too - in addition to the political benefits of democratization, the software is generally safer and less buggy because far more engineers can audit the code than just the several thousand at your company.

It would be great to record an episode of the podcast where someone pills me on the real likelihood of a d/acc future, and on the idea that decentralized applications of the technology will be better or more useful, not just ideologically private (nice-to-have). People, including me, will generally trade privacy for usefulness.

either way… we want embodied interfaces

The other reason why I am questioning the magic stones future is that I realize that though it seems like a waste to be “locked in” staring at screens all day, that’s not unique to computing interfaces. As my friend Arjun described, we experience focus and flow when reading, when playing the piano, when meditating. There’s a place for trance states in the human experience.

The difference is… even trance states can be physical. I notice that flow state as a software engineer is quite a physical experience. I nod my head up and down, typing to a silent beat. I’ll tap out my build, run, and serve commands rhythmically, so I don’t miss any. I have to be rhythmic to be smooth. Smooth is quick and correct.

Wow. What does this look like when I’m not typing my code anymore? What does this look like when I am commanding fleets of agents?

What will work itself look like? What will we be doing?

We’ll be doing a lot of management, a lot of directing. LLMs will have to present us lots of context at different levels of complexity that we need to be able to zoom into quickly.

Probably one nice place for me to start is that I hate to read code. How can I make it easier to audit the things that my agents are doing? Could I have Claude Code generate a schema diagram, and prompt it by editing that schema diagram?

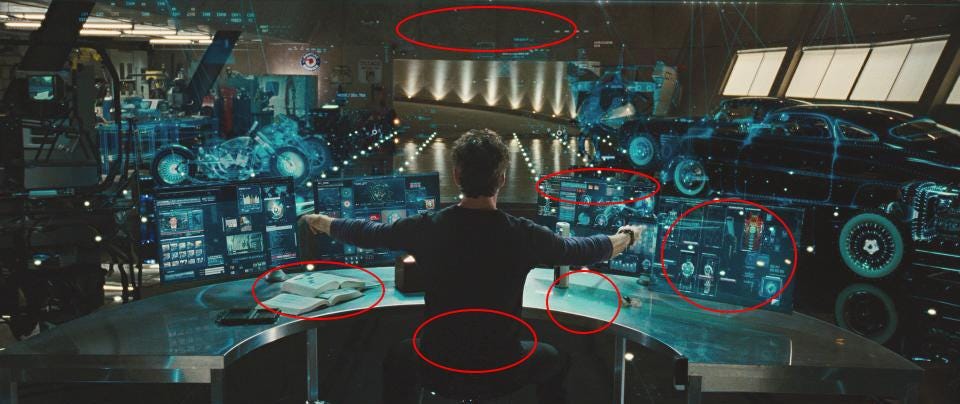

Nodal Point II: The Tony Stark workshop

The Tony Stark workshop dream is actually going to be true. We’re going to be moving things around to grab pieces of context from knowledge bases, images on the internet, snippets from Obsidian, and talk to the AI to share intent. Then it’ll execute on that by building things.

New questions: How will we build things in the future? What will we want to build? Probably more software will be written. Who will write it?

To interview: Jake. Andrew.

Meta-node: will vs want

I would simply like to build the future and make it happen faster. It’s what I want. I want to build the best interface. What are the right questions for me to ask?

So far the two meta questions I’ve been asking have been: what will computers look like in 10 years? and what do I want computers to look like in 10 years? Will and want are two very different things.

Will has something to do with product-market fit. Will has something to do with gradient descent, local optimization. Will has something to do with revealed preference. What is the direction we are already headed in? I look around at the companies I’m working with - HDR, Sandbar, Plastic Labs. I look around at the future that others are creating. Will asks me to surrender to the flow of progress and time and ask, where is my place?

Want has something to do with agency. Want has something to do with recognizing how much power we have now to build and learn and shape the world, and dreaming bigger, and really imagining what the good shit is that we can build. Want is recognizing that the flourishing futures will not happen by default, and working to help them happen.

I’m accepting recommendations for other meta-questions to ask.